I have been a Puppet user for a couple of years now, first at work, and eventually for my personal servers and computers. Although it can have a steep learning curve, I find Puppet both nimble and very powerful. I also prefer it to Ansible for its speed and the agent-server model it uses.

Sadly, Puppet Labs hasn't been the most supportive upstream and tends to move pretty fast. Major versions rarely last for a whole Debian Stable release and the upstream .deb packages are full of vendored libraries.

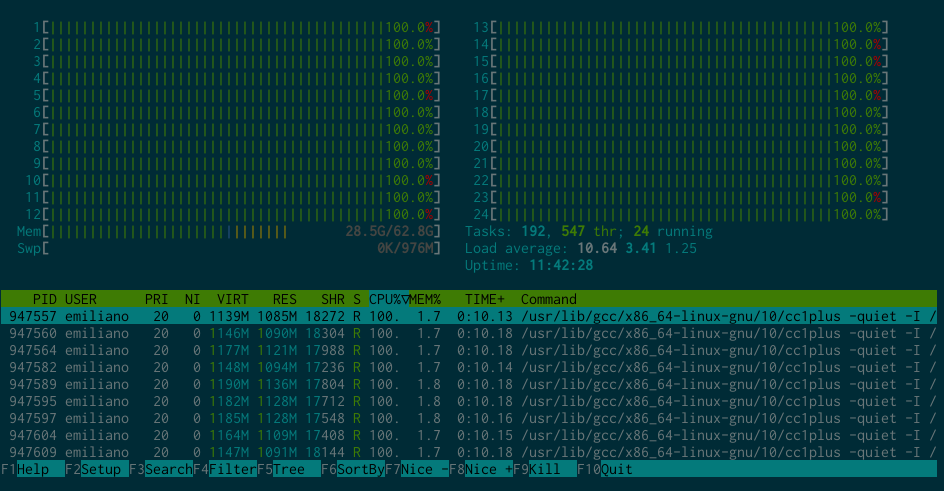

Since 2017, Apollon Oikonomopoulos has been the one doing most of the work on Puppet in Debian. Sadly, he's had less time for that lately and with Puppet 5 being deprecated in January 2021, Thomas Goirand, Utkarsh Gupta and I have been trying to package Puppet 6 in Debian for the last 6 months.

With Puppet 6, the old Ruby Puppet server using Passenger is no longer supported and has been replaced by puppetserver, written in Clojure and running on the JVM. That's quite a large change and although puppetserver does reuse some of the Clojure libraries puppetdb (already in Debian) uses, packaging it meant quite a lot of work.

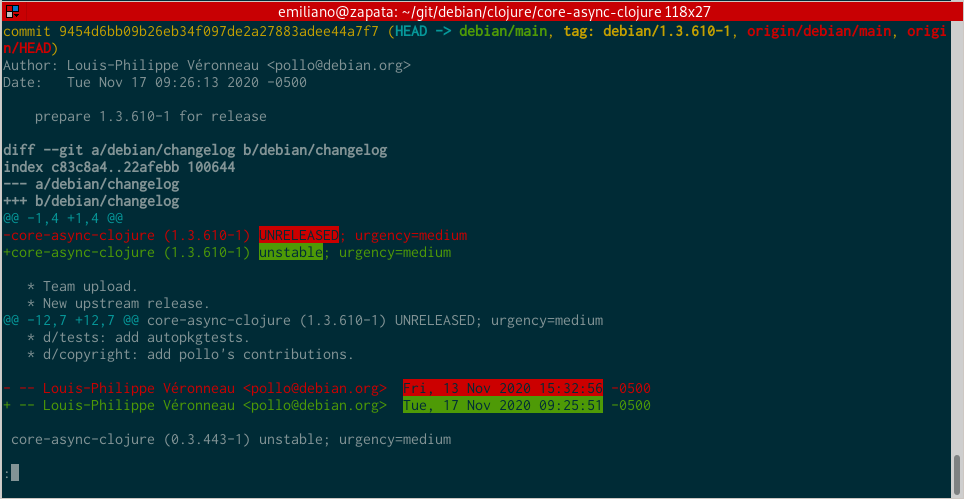

Work in the Clojure team

As part of my efforts to package puppetserver, I had the pleasure of joining the Clojure team and learning a lot about the Clojure ecosystem.

As I mentioned earlier, a lot of the Clojure dependencies needed for puppetserver were already in the archive. Unfortunately, when Apollon Oikonomopoulos packaged them, the leiningen build tool hadn't been packaged yet. This meant I had to rebuild a lot of packages, on top of packaging some new ones.

Since then, thanks to the efforts of Elana Hashman, leiningen has been packaged and lets us run the upstream testsuites and create .jar artifacts closer to the upstream releases.

While packaging puppetserver, I worked on the following packages:

List of packages

- backport9
- bidi-clojure
- clj-digest-clojure
- clj-helper
- clj-time-clojure
- clj-yaml-clojure
- cljx-clojure
- core-async-clojure
- core-cache-clojure
- core-match-clojure
- cpath-clojure
- crypto-equality-clojure
- crypto-random-clojure
- data-csv-clojure
- data-json-clojure
- data-priority-map-clojure
- java-classpath-clojure
- jnr-constants
- jnr-enxio
- jruby
- jruby-utils-clojure
- kitchensink-clojure
- lazymap-clojure
- liberator-clojure
- ordered-clojure
- pathetic-clojure
- potemkin-clojure
- prismatic-plumbing-clojure
- prismatic-schema-clojure
- puppetlabs-http-client-clojure
- puppetlabs-i18n-clojure
- puppetlabs-ring-middleware-clojure
- puppetserver
- raynes-fs-clojure
- riddley-clojure
- ring-basic-authentication-clojure
- ring-clojure
- ring-codec-clojure
- shell-utils-clojure
- ssl-utils-clojure
- test-check-clojure
- tools-analyzer-clojure
- tools-analyzer-jvm-clojure
- tools-cli-clojure
- tools-reader-clojure
- trapperkeeper-authorization-clojure
- trapperkeeper-clojure
- trapperkeeper-filesystem-watcher-clojure
- trapperkeeper-metrics-clojure
- trapperkeeper-scheduler-clojure
- trapperkeeper-webserver-jetty9-clojure
- url-clojure
- useful-clojure
- watchtower-clojure

If you want to learn more about packaging Clojure libraries and applications, I rewrote the Debian Clojure packaging tutorial and added a section about the quirks of using leiningen without a dedicated dh_lein tool.

Work left to get puppetserver 6 in the archive

Unfortunately, I was not able to finish the puppetserver 6 packaging work.

It is thus unlikely it will make it into Debian Bullseye. If the issues described below are fixed, it would be possible to package puppetserver in bullseye-backports though.

So what's left?

jruby

Although I tried my best (kudos to Utkarsh Gupta and Thomas Goirand for the help), jruby in Debian is still broken. It does build properly, but the testsuite fails with multiple errors:

- ruby-psych is broken (#959571)
- there are some random Java failures on a few tests (no clue why)
- tests run by rakelib/rspec.rake fail to run, maybe because the --pattern command line option isn't compatible with our version of rake? Utkarsh seemed to know why this happens.

jruby testsuite failures aside, I have not been able to use the jruby .deb the package currently builds in jruby-utils-clojure (its testsuite fails). I had the exact same failure with the (more broken) jruby version that is currently in the archive, which leads me to think this is a LOAD_PATH issue in jruby-utils-clojure. More on that below.

To bypass these issues, I tried to vendor jruby into jruby-utils-clojure. At first, I understood vendoring to mean including upstream pre-built artifacts (jruby-complete.jar) and shipping them directly.

After talking with people on the #debian-mentors and #debian-ftp IRC channels, I now understand why this isn't a good idea (and why it's not permitted in Debian). Many thanks to the people who were patient and kind enough to discuss this with me and give me alternatives.

As far as I now understand it, vendoring in Debian means "to have an embedded copy of the source code in another package". Code shipped that way still needs to be built from source. This means we need to build jruby ourselves, one way or another.

Vendoring jruby in another package thus isn't terribly helpful.

If fixing jruby the proper way isn't possible, I would suggest trying to build the package using embedded code copies of the external libraries jruby needs to build, instead of trying to use the Debian libraries. This should make it easier to replicate what upstream does and to have a final .jar that can be used.

jruby-utils-clojure

This package is a first-level dependency for puppetserver and is the glue between jruby and puppetserver.

It builds fine, but the testsuite fails when using the Debian jruby package. I think the problem is caused by a jruby LOAD_PATH issue.

The Debian jruby package plays with the LOAD_PATH a little to try to use Debian packages instead of downloading gems from the web, as upstream jruby does. This seems to clash with the gem-home, gem-path, and jruby-load-path variables in the jruby-utils-clojure package. The testsuite plays around with these variables and some Ruby libraries can't be found.
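To make the suspected clash a little more concrete, here is a minimal Python sketch of how an ordered load path is searched. It is a simplified model of Ruby's `require` (it ignores gem activation, native extensions and already-loaded features), and the directory names `vendor_ruby` and `gem-home` as well as the library name `example_lib` are hypothetical stand-ins, not the actual paths or libraries used by the Debian jruby package or jruby-utils-clojure.

```python
import tempfile
from pathlib import Path
from typing import Optional, Sequence


def resolve_require(feature: str, load_path: Sequence[str]) -> Optional[str]:
    """Return the first '<feature>.rb' found by scanning load_path in order.

    Simplified model of Ruby's `require`: gem activation, native
    extensions and already-loaded features are ignored.
    """
    for directory in load_path:
        candidate = Path(directory) / f"{feature}.rb"
        if candidate.is_file():
            return str(candidate)  # first match wins
    return None  # Ruby would raise LoadError at this point


with tempfile.TemporaryDirectory() as root:
    # Hypothetical layout: a Debian-packaged library lives in vendor_ruby,
    # while a testsuite points its gem and load paths at a private gem-home.
    debian_dir = Path(root, "vendor_ruby")
    gem_dir = Path(root, "gem-home")
    debian_dir.mkdir()
    gem_dir.mkdir()
    (debian_dir / "example_lib.rb").write_text("# stand-in for a packaged library\n")

    extended_path = [str(debian_dir), str(gem_dir)]  # Debian dirs kept
    replaced_path = [str(gem_dir)]                   # Debian dirs dropped

    print(resolve_require("example_lib", extended_path) is not None)  # True
    print(resolve_require("example_lib", replaced_path) is not None)  # False
```

If the testsuite's gem-path and jruby-load-path settings replace rather than extend the directories the Debian package relies on, a library can exist on disk and still fail to load, which could explain the kind of failure described above.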

I tried to fix this, but failed. Using the upstream jruby-complete.jar instead of the Debian jruby package, the testsuite passes fine.

This package could clearly be uploaded to NEW right now by ignoring the testsuite failures (we're just packaging static .clj source files in the proper location in a .jar).

puppetserver

jruby issues aside, packaging puppetserver itself is 80% done. Using the upstream jruby-complete.jar artifact, the testsuite fails with a weird Clojure error I'm not sure I understand, but I haven't debugged it for very long.

Upstream uses git submodules to vendor puppet (agent), hiera (3), facter and puppet-resource-api so the testsuite can run properly. I haven't touched that, but I believe we can either:

- link to the Debian packages
- fix the Debian packages if they don't include the right files (maybe in a new binary package that just ships part of the source code?)

Without the testsuite actually running, it's hard to know what files are needed in those packages.

What now

Puppet 5 is now deprecated.

If you or your organisation cares about Puppet in Debian, puppetserver really isn't far from making it into the archive.

Very talented Debian Developers are always eager to work on these issues and can be contracted for very reasonable rates. If you're interested in contracting someone to help iron out the last issues, don't hesitate to reach out via one of the following:

As for me, I'm happy to say I got a new contract and will go back to teaching Economics for the Winter 2021 session. I might help out with some general Debian packaging work from time to time, but it'll be as a hobby instead of a job.

Thanks

The work I did during the last 6 weeks would not have been possible without the support of the Wikimedia Foundation, who were gracious enough to contract me. My particular thanks to Faidon Liambotis, Moritz Mühlenhoff and John Bond.

Many, many thanks to Rob Browning, Thomas Goirand, Elana Hashman, Utkarsh Gupta and Apollon Oikonomopoulos for their direct and indirect help, without which all of this wouldn't have been possible.

I've been teaching economics for a few semesters already and, slowly but surely, I'm starting to get the hang of it. Having to deal with teaching remotely hasn't been easy though and I'm really hoping the winter semester will be in-person again.

Although I worked way too much last semester¹, I somehow managed to transition to using a graphics tablet. I bought a Wacom Intuos S tablet (model CTL-4100) in late August 2021 and overall, I have been very happy with it. Wacom Canada offers a small discount for teachers and I ended up paying 115 CAD (~90 USD) for the tablet, an overall very reasonable price.

Unsurprisingly, Wacom support on Linux is very good and my tablet worked out of the box. The only real problem I had was that, by default, the tablet sometimes boots up in Android mode, making it unusable. This is easily solved by pressing down on the pad's first and last buttons for a few seconds, until the LED turns white.

The included stylus came with hard plastic nibs, but I find them too slippery. I eventually purchased hard felt nibs, which increase the friction and make for a more paper-like experience. They are a little less durable, but I have written quite a bit and still haven't gone through a single one.

Learning curve

Learning how to use a graphics tablet took me at least a few weeks! When writing on a sheet of paper, the eyes see what the hand writes directly. This is not the case when using a graphics tablet: you are writing on one surface and seeing the result on your screen, a completely different surface. This dissociation takes a bit of practice to master, but after going through more than 300 pages of notes, it now feels perfectly normal.

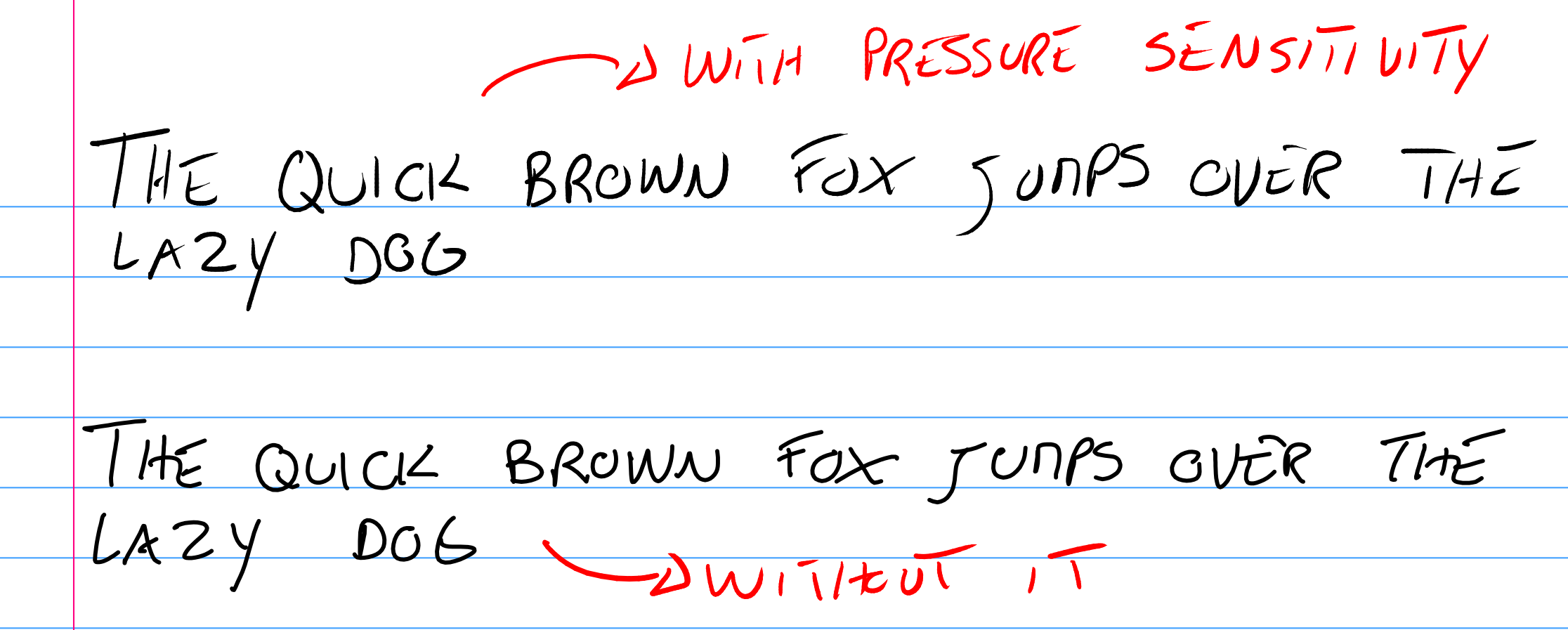

Here is a side-by-side comparison of my very average handwriting²:


I still prefer the result of writing on paper, but I think this is mostly due to me not using the pressure sensitivity feature. The support in

Use case

The first use case I have for the tablet is grading papers. I've been asking my students to submit their papers via Moodle for a few semesters already, but until now, I was grading them using PDF comments. The experience wasn't great³ and was rather slow compared to grading physical copies.

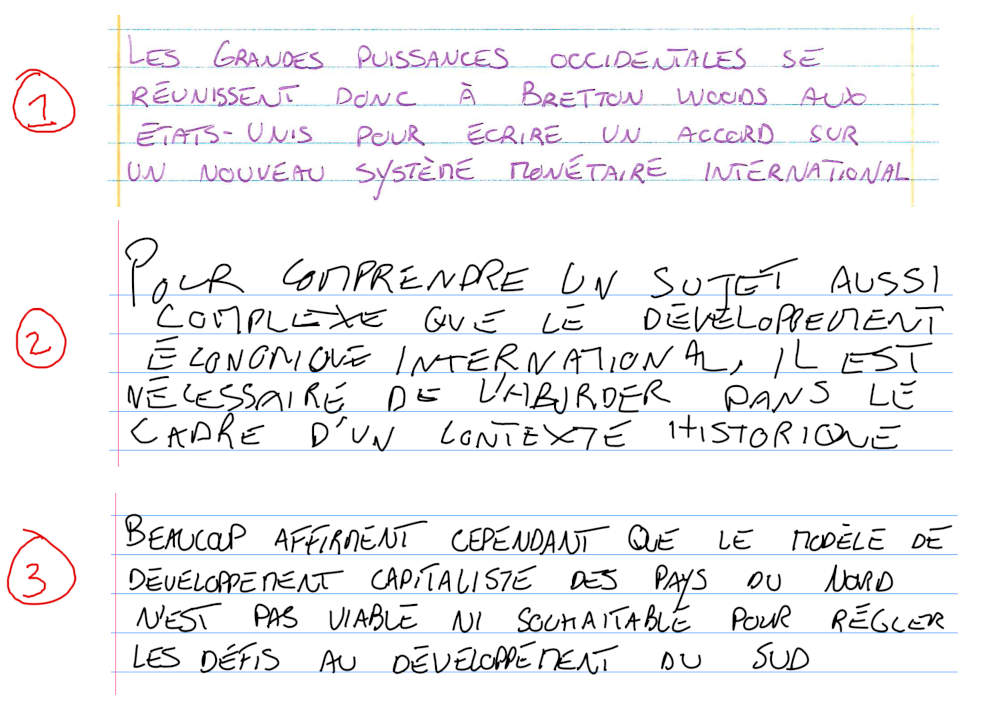

I'm also a somewhat old-school teacher: I refuse to teach using slides. Death by PowerPoint is real. I write on the blackboard a lot⁴ and I find it much easier to prepare my notes by hand than by typing them, as the end result is closer to what I actually end up writing on the board.

Writing notes by hand on sheets of paper is a chore too, especially when you revisit the same material regularly. Being able to handwrite digital notes gives me a lot more flexibility and it's been great.

So far, I have been using


As the years passed, chip size decreased, battery capacity improved and machine learning blossomed: truly a perfect storm for the wireless ANC headphones market. I had mostly stayed a sceptic of this tech until recently, when a kind friend offered to let me try a pair of Sony WH-1000XM3.

Having tested them thoroughly, I have to say I'm really tempted to buy them from him, as they truly are fantastic headphones.

I won't be keeping them though.

Whilst I really like what Sony has achieved here, I've grown to understand ANC simply isn't for me. Some of the drawbacks of ANC somewhat bother me: the ear pressure it creates is tolerable, but it is an additional energy drain over long periods of time and eventually gives me headaches. I've also found ANC accentuates the motion sickness I suffer from, probably because it messes with part of the inner ear's balance system.

Most of all, I found that it didn't provide noticeable improvements over good passive noise cancellation solutions, at least in terms of how high I have to turn the volume up to hear music or podcasts clearly. The human brain works in mysterious ways and it seems that ANC cancelling a class of noises (low hums, constant noises, etc.) makes other noises much more noticeable. People talking or bursty high-pitched noises bothered me much more with ANC on than without.

So for now, I'll keep using my trusty Sennheiser HD 280 Pro.


I'm sad to see this

Summer has just started and I'm already looking forward to winter :)

Luckily, on the screen you do have the option to reboot or power off. I did a reboot and lo and behold, the system was able to input characters again. And this has happened time and again. I tried to find GOK and failed to remember that GOK had been

I even tried the same with xvkbd but to no avail. I use MATE as my desktop manager, so maybe the instructions need some refinement?

$ cat /etc/lightdm/lightdm-gtk-greeter.conf | grep keyboard


We fixed a couple of


Sadly, even though

Contrary to what I was expecting, the book feels more like an extension of the LCA keynote I previously mentioned than of Roads and Bridges. Indeed, as the following quote makes apparent, Eghbal no longer believes funding to be the primary problem of FOSS:
